Analysis and Modeling Exercises

AME Guidelines

We’ll engage in 5 analysis and modeling exercises (AMEs). These exercises address a major goal of the course: developing skills in quantitative inference in the context of the biological sciences.

Some specific themes we’ll explore include:

- Building and applying predictive models to biological phenomena.

- Effective visualization of trends in research data.

- Qualitative and statistical inference of biological data.

Every 2-3 weeks or so, we’ll introduce the concepts and tasks taken up by each AME. Unlike the mini-projects, AMEs are focused mainly on quantitative approaches to biomechanical research, not the bio-physical concepts that we explore elsewhere.

AMEs will take about a week to complete. At least initially, these exercises will be self-assessed. That is, after each AME is completed and submitted, a solution will be issued and students will have about another week to compare their submitted work to the solution and resubmit a revised AME with a self-assessed preliminary score. The original submission, revised submission, and self-assessed score will then be reviewed by Prof. K, who will, in short order, return the self-assessed AME with some feedback and a final score.

A hypothetical example of an AME can be found at this link.

Initial submissions

AMEs will be released on Tuesdays and are due the following Tuesday. Each AME will include a list of objectives with a total point value of 8–9 points. An interpretation section in each AME will be worth 1–2 points.

AMEs are meant to be short, concise explorations of quantitative topics relevant to our experimental work. Initial submissions should be contained in an R markdown file and follow this format:

- The following section headers that organize your submission.

Analysis: A list of objectives and the relevant analysis to address them. This section can be set up by pasting in the objectives from the AME assignment description. Each objective should be accompanied by a code chunk that meets that specific objective. This may include, among other things, loading data, implementing models, and producing illuminating visualizations. NB: Each code chunk must be given a name that corresponds to its objective, e.g., “objective 1”.

Interpretation: A brief statement as to what the analysis means with reference to the objectives. This should be no longer than three short sentences.
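The format above can be sketched in R as a small helper that writes a skeleton .Rmd. This is only an illustration: the function `ame_skeleton()`, the header level (`#`), and the placeholder objective text are assumptions, not part of any assignment. The chunk names follow the “objective 1” pattern described above.

```r
# Hypothetical helper: build the lines of a skeleton .Rmd for an AME submission.
# `objectives` holds the objective text pasted from the assignment description.
ame_skeleton <- function(objectives) {
  fence <- strrep("`", 3)  # three backticks, i.e., a chunk delimiter
  chunks <- unlist(lapply(seq_along(objectives), function(i) {
    c(
      paste0("- ", objectives[i]),
      "",
      paste0(fence, "{r objective ", i, "}"),  # chunk named for its objective
      "# code that meets this objective goes here",
      fence,
      ""
    )
  }))
  # Header level is an assumption; use whatever the assignment specifies
  c("# Analysis", "", chunks,
    "# Interpretation", "",
    "No more than three short sentences.")
}

# Placeholder objectives (made up for illustration)
skeleton <- ame_skeleton(c(
  "First objective, pasted from the assignment description. [3]",
  "Second objective, pasted from the assignment description. [3]"
))

# Write the skeleton to a temporary file; point this at your own project folder
writeLines(skeleton, file.path(tempdir(), "ame_skeleton.Rmd"))
```

Opening the resulting file in RStudio should show one named chunk under each objective, ready to be filled in.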

Initial AME markdowns should be submitted through a Google form, the link to which will be provided in the AME assignment description.

This link leads to a hypothetical submission for our hypothetical AME.

Self-Assessment and Revision Procedure

Immediately following the deadline for initial submission, Prof. K will release the analytical solution. Students will then have one week to compare their initial submission with the solution, make a self-assessment, and prepare a revised markdown.

A solution to our hypothetical AME can be found here.

Self-Assessment

The solution released by Prof. K will contain a link to a self-assessment form where students can enter marks for each objective and the interpretation section.

Answering most objectives in the AME will require code to analyze, visualize, or summarize data. In self-assessing these objectives, students should consider the following:

Compared to the solution . . .

- Does the submission address the objective?

- Is it concise?

In self-assessing the interpretation section, students should consider whether their submission, as compared to the solution, was a good-faith effort to contextualize the questions or points raised by the objectives.

The following rubric codifies this advice and should be used to assess points for each objective and the interpretation section.

| Component | Full points | Partial points\(^*\) | 0 points |
|---|---|---|---|
| Each objective in the Analysis section | Addresses or completes all aspects of the objective as outlined. If this requires a code chunk, the code within it is concise, avoids unneeded operations, and runs successfully. | Addresses or completes some, but not all, parts of the objective. If this requires a code chunk, the code may not be concise or may not run successfully. | Addresses or completes few of the questions or required tasks, or submits few of the required materials. Code doesn’t run. |
| Interpretation section | Makes a good-faith effort to address the objectives in the context of the outcomes of the analysis | Addresses some, but not all, of these points or questions | Addresses none of the points or questions |

\(^*\)Partial points should be awarded proportionally. For example, say an objective is worth 3 points and two of its three aspects were completed fully. The self-assessed score for this objective should be 2 points. Some subjectivity may be involved, especially if an objective is only partially met. Please use your discretion and award points in increments of 0.5.
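The proportional rule can be sketched as a short R function. This is a minimal illustration, not an official scoring tool: the name `partial_points()` and its arguments are hypothetical, and it assumes an objective can be broken into equally weighted aspects.

```r
# Hypothetical helper: proportional partial credit in increments of 0.5.
# Assumes every aspect of an objective carries equal weight.
partial_points <- function(worth, aspects_met, aspects_total) {
  stopifnot(aspects_total > 0, aspects_met >= 0, aspects_met <= aspects_total)
  raw <- worth * aspects_met / aspects_total  # proportional share of the points
  round(raw * 2) / 2                          # snap to the nearest half point
}

# The footnote's example: a 3-point objective with two of three aspects met
partial_points(worth = 3, aspects_met = 2, aspects_total = 3)  # 2
```

Treat the result as a starting point. The rubric still leaves room for discretion when aspects are not equally important.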

Sum all the points for the Analysis and Interpretation sections to give yourself a final score.

Prof. K will evaluate each self-assessment and, if needed, adjust the individual marks, either up or down.

AME Revisions

Students will have the opportunity to revise their initial AME submissions and submit the revision by uploading it through the self-assessment Google form. The revised AME submission should include the following:

For each objective in the analysis section for which full marks were not given, a revised code chunk or chunks that integrate the solution and original submission. This integration must include comments as to the differences between the initial and solution code. See below for an example. If an objective did receive full marks, please include it just the same.

For an interpretation section that did not receive full marks or for one where it must change given the solution, provide a brief statement as to how the original interpretation has changed once the solution is integrated.

If the revised objective sections produce the same results as the solution and, if needed, the interpretation section is sufficiently modified, then the student earns half of the missing points back. For example, if a student gave themselves a score of 7 and Prof. K agreed with this score, then the revised score would be an 8.5.
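The arithmetic behind this example can be sketched in R. The function name `revised_score()` is hypothetical, and the total of 10 points is an assumption implied by the example (7 becoming 8.5); the actual total depends on the AME.

```r
# Hypothetical helper: a qualifying revision earns back half of the missing points.
# `agreed_score` is the self-assessed score after Prof. K's review.
revised_score <- function(agreed_score, total) {
  stopifnot(agreed_score >= 0, agreed_score <= total)
  missing <- total - agreed_score  # points not earned on the initial submission
  agreed_score + missing / 2       # half of the missing points are returned
}

# Assumed total of 10 points, matching the example in the text
revised_score(agreed_score = 7, total = 10)  # 8.5
```

This applies only when the revised chunks reproduce the solution's results and the interpretation, if needed, is sufficiently modified.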

Assessment and Revision Example

Assessment Example

As we run through an assessment and revision, let’s compare the hypothetical submission to a hypothetical solution.

If you recall from our hypothetical AME, we’re studying the bite force of the eastern gray squirrel (Sciurus carolinensis).

Let’s begin by looking at an objective that should receive full marks. For objective 3, students were asked to do the following, a task worth 3 points.

- Please download the data you see above by accessing this link. Read it in as CSV data and save it to an object named dat. [3]

For this, the solution included the following:

- Please download the data you see above by accessing this link. Read it in as CSV data and save it to an object named dat. [3]

```{r objective 3}
dat <- read_csv("data/bite_force.csv")
```

For this, the hypothetical student included the following in their submission:

- Please download the data you see above by accessing this link. Read it in as CSV data and save it to an object named dat. [3]

```{r objective 3}
dat <- read_csv("data/bite_force.csv")
```

Note here that the student’s code is identical to the solution. They used the appropriate function to load the data and, assuming the code chunk ran successfully (i.e., the data were downloaded and placed in the data directory alongside the .Rmd, as the path in the chunk expects), it was saved to the object dat. In addition, the name of this chunk reflects the objective it satisfies. So, according to our rubric, the objective was fully met: the code ran, the object dat contains the data, and the chunk has the correct name. Thus, this student should award themselves all three points.

Let’s now look at a submission that should receive partial marks. For objective 4, students were asked to do the following:

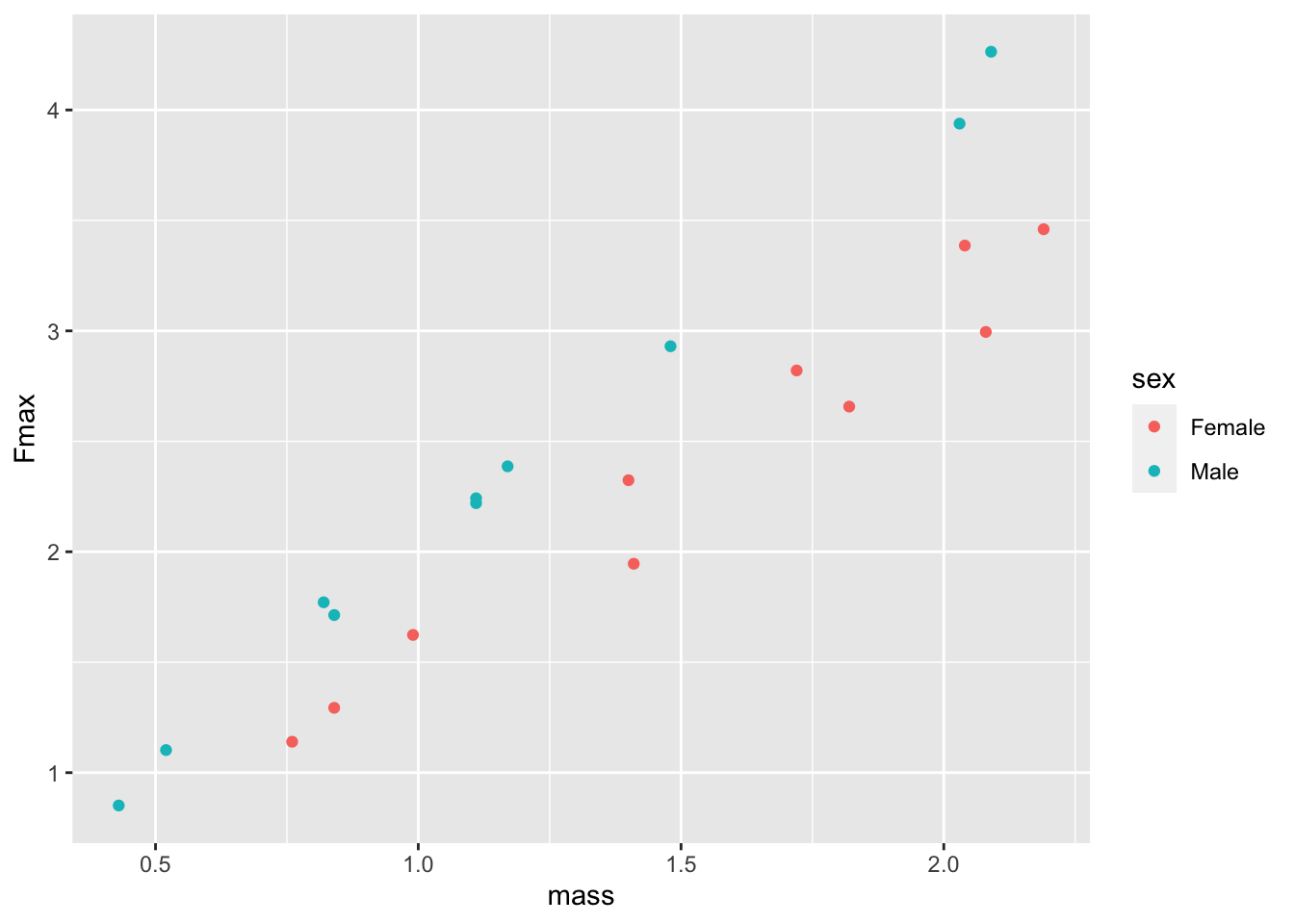

- Produce an illustrative visualization of the relationship between squirrel maximum bite force and mass and how this relationship may vary by sex. [3]

For this, the solution included the following (output included):

- Produce an illustrative visualization of the relationship between squirrel maximum bite force and mass and how this relationship may vary by sex. [3]

```{r objective 4}
dat %>%
  ggplot(aes(mass,Fmax,col=sex))+geom_point()
```

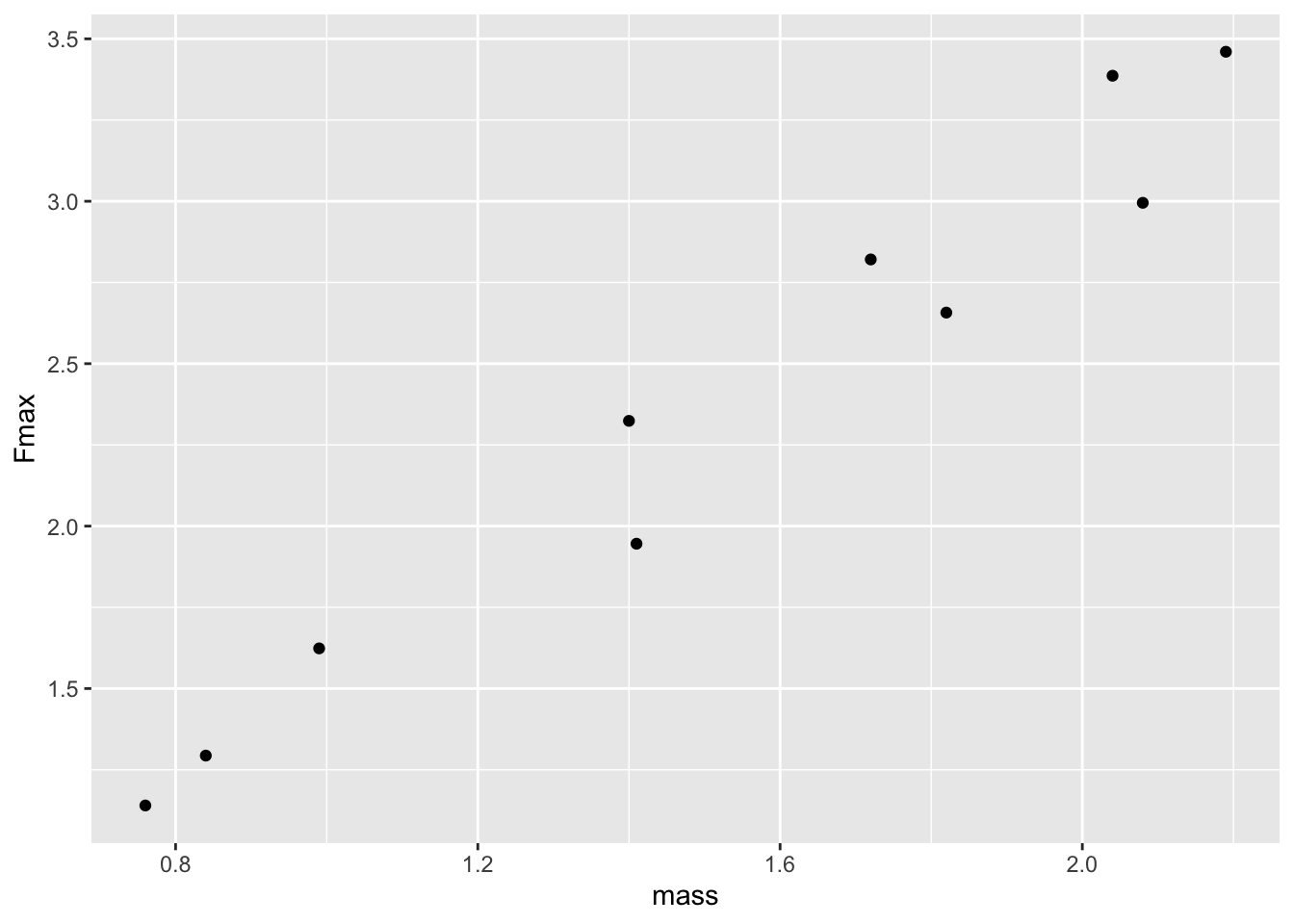

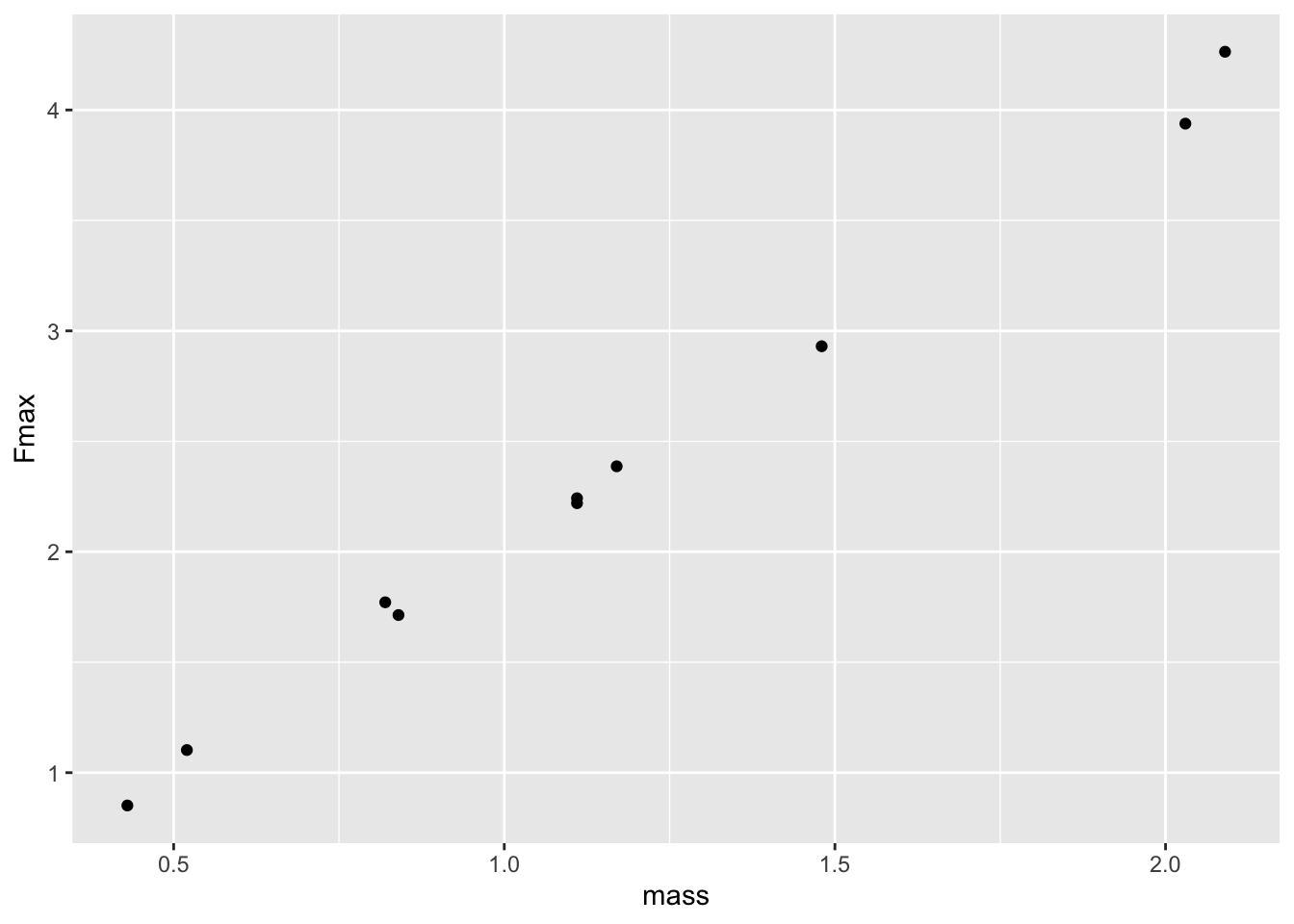

For this, the hypothetical student included the following in their submission (output also included):

```{r objective 4}
dat_F <- dat %>% filter(sex=="Female")
dat_M <- dat %>% filter(sex=="Male")

dat_F %>%
  ggplot(aes(mass,Fmax))+geom_point()

dat_M %>%
  ggplot(aes(mass,Fmax))+geom_point()
```

This part of the submission does well in that the code to address the objective runs successfully. But note that it misses the mark with regard to two important rubric metrics:

- Does it address the objective?

- Is it concise?

In assessing whether this submission addresses the objective, we see that two separate plots are produced and, thus, it’s hard to compare the sexes. This addresses the objective only partially. In addition, filtering by sex and producing two separate plots is not concise. So, as compared to the solution, the submission does not fully address the objective and isn’t as concise. This sits squarely in the partial-points column according to our rubric. A fair score for this part of a submission might be 1.5.

Revision Example

Now that we have a framework for self-assessment, let’s turn to revising the original submission. As mentioned earlier, a revised AME submission should include an integration of the solution with the original submission. For code chunks, this must include comments as to the differences between the initial and solution code. Likewise, for an interpretation section that did not receive full marks or for one where it must change given the solution, provide a brief statement as to how the original interpretation has changed once the solution is integrated.

Let’s focus on the same hypothetical submission that received partial points as a result of the self-assessment. As a reminder, the student submitted the following . . .

- Produce an illustrative visualization of the relationship between squirrel maximum bite force and mass and how this relationship may vary by sex. [3]

```{r objective 4}
dat_F <- dat %>% filter(sex=="Female")
dat_M <- dat %>% filter(sex=="Male")

dat_F %>%
  ggplot(aes(mass,Fmax))+geom_point()

dat_M %>%
  ggplot(aes(mass,Fmax))+geom_point()
```

And the solution included . . .

- Produce an illustrative visualization of the relationship between squirrel maximum bite force and mass and how this relationship may vary by sex. [3]

```{r objective 4}
dat %>%
  ggplot(aes(mass,Fmax,col=sex))+geom_point()
```

Using comments (i.e., #) in the code chunk, a revised submission might look something like this.

```{r objective 4}
# Removed: the filtering that split the data by sex and the two separate
# plots for females and males. These made the sexes hard to compare.
# dat_F <- dat %>% filter(sex=="Female")
# dat_M <- dat %>% filter(sex=="Male")
# dat_F %>%
#   ggplot(aes(mass,Fmax))+geom_point()
# dat_M %>%
#   ggplot(aes(mass,Fmax))+geom_point()

# As in the solution: plot all the data in one figure and color points by sex
dat %>%
  ggplot(aes(mass,Fmax,col=sex))+geom_point()
```

Note the use of the # to “turn off” the code we don’t want and to add descriptive comments on how the chunk addresses the objective.